I’m an Elon Musk fanboy, drive a tesla and own tesla stock, I’m a true believer. One of the things I like about the man is the way he does everything in reverse, when it comes to efficiency and optimization. The attitude of most people is

‘This thing runs at 10m/s. How can we make it run at 12 m/s?‘

Whereas Elon takes the opposite view:

‘Are there any laws of physics that prevent this running at 100,000m/s? if not, how do we get it to do this?’

This is why he makes crazy big predictions and sets insane targets, most of which don’t get met on time, but when they do, its pretty phenomenal. If the next falcon heavy launch recovers the center core too, thats even more game changing, and right now, the estimate is that the falcon heavy launch cost is $90 million verses $400 million of its nearest competitor (which only launches half the weight anyway). That not just beating the competition, but thats bludgeoning them, tying them up, putting them in a boat, pushing the boat out into the middle of the lake, and laughing from the shore during a barbecue as the boat sinks.

When it comes to my favorite topic (car factory efficiency, due to me making the game Production Line), he comes out with even crazier targets.

“I think we are … in terms of the extra velocity of vehicles on the line, it’s probably about, including both X and S, it’s maybe five centimeters per second. This is very slow,” he said. Musk then added he was “confident” Tesla can get a twentyfold increase of that speed.”

Now we can debate all day long whether the guy is nuts, and over promising and whether or not we could ever, ever get a production line that fast, but you have to admire the ambition. You dont get to create privately-made reusable rockets without ambition. I just wish we had the same sort of drive in software as he has for hardware. The efficiency of modern software is so bad its frankly beyond embarrassing, its shameful, totally and utterly shameful. let me dredge up a few examples for you.

I’m running windows 10, and just launched the calculator app. Its a calculator, this is not rocket science. A glance at task manager shows me that its using up 17.8MB of RAM. I am not kidding, try it for yourself. I’m pretty sure that there was a calculator app for the sinclair ZX-81 with its 1k (yes 1k) of RAM. Sure, the windows 10 app has…err nicer fonts? and the window is very slightly translucent… but 17MB? We need 17MB to do basic maths now? As I type this, firefox has got 1,924MB of RAM assigned to it, and is regularly hitting 2% of my CPU. I’m just typing a blog post, just typing… and thats 2% of a CPU that can do 49,670 MIPS or roughly 50 BILLION instructions per second. Oh…we have slightly nicer fonts too. wahey?

I’d wager the percentage of people coding games who have any real idea how the underlying engine works is tiny, maybe 5%, and of those maybe 1% understand what happens at a lower level. Unity doesn’t talk to your graphics card, it does it through OpenGL or DirectX, and how many of us really understand the entire code path of those DLLS? (I don’t) and of those, how many understand how the video card driver translates those directx calls into actual processor instructions for the card hardware? By the time you filter your code through unity, directx and drivers, the efficiency of what actually happens to the hardware is laughable, LAUGHABLE.

We should aspire to do better, MUCH better. Perhaps the biggest obstacle is that most of us do not even know what our code is DOING. Unless you have a really good profiler, you can easily lose track of what goes on when your game runs, and we likely have zero idea what happens after our instructions leave our code and disappear into the bowels of the O/S or the graphics API. Decent profilers can open your eyes to this stuff, one that can handle displays of each thread and show situations where threads are stuck waiting is even better. Both AMD and nvidia provide us with tools that let us step through the rendering of individual frames to see how each pixel is rendered, then re-rendered and re-rendered many times per frame.

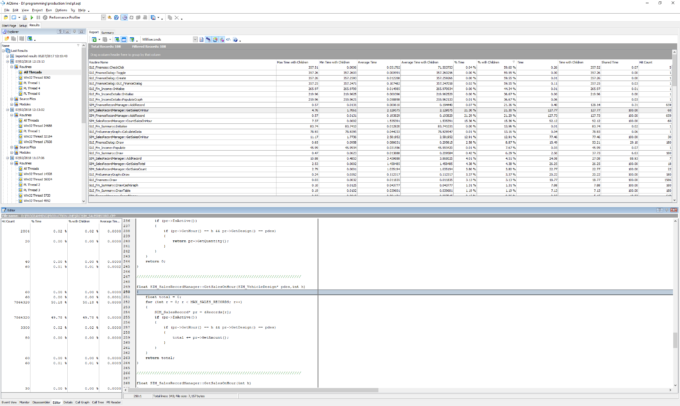

If you want to consider yourself not just a hacker but an ENGINEER, then you owe it to yourself, as a programmer to get familiar with profilers and code analysis tools. Some are free, most are not, but they are a worthy investment. Personally I use AQTime, and occasionally intel XE Amplifier, plus the built-in visual C++ tools (which are a bit ‘meh’ apart from the concurrency visualizer). I also use nvidias nsight tools to keep an eye on graphics performance. None of these tools are perfect, and I am by no means an especially good programmer, but I am at the very least, fully aware that the code I write, despite my efforts, is nowhere REMOTELY close to as efficient as it could be, and that there is plenty of room to do better. If Production Line currently runs at 60FPS for you (average speed across all players is 58.14) then eventually I should be able to get it so you can play with a factory at least 10x that size for the same frame rate. I just need to keep at it.

I’ll never be the Elon Musk of software, but I’m trying.