OK, so you have to be over 40 to know what the song is or who this guy is…

But anyway… deprecation gets me down. What is deprecation?

http://en.wikipedia.org/wiki/Deprecation

“In the process of authoring computer software, its standards or documentation, deprecation is a status applied to software features to indicate that they should be avoided, typically because they have been superseded”

Sounds reasonable enough, but it’s a real pain. I discovered today that DirectInput is ‘deprecated’. Presumably Microsoft declared it thus, whilst also being the people who wrote it, and who also wrote a ton of other stuff that I’m probably not supposed to use anymore, despite the fact that when they released it, they told everyone it was the best thing EVER, and that we were all luddites for not switching to it.

I really cannot keep up with what is right, modern, usable, standards-compliant etc., and what is old, unsupported and unhip.

Is C# still cool? What about GDI+? Did that even happen? What about DirectX Graphics? Or maybe .NET? Do people still use Java? And is it OK to use Flash? Or is it now HTML5? And is Ruby on Rails new or old now?

I reckon a game developer needs to write at least three whole games using a technology before they are really on top of it. I’m on top of DirectX 9 now, and feel quite confident about it, but I’m pretty sure some genius at Microsoft reckons I should be shot for not using DirectX 10, or is it 11 or 12 now? Or is DirectX not even used now? How are programmers supposed to keep up? It’s fine if your job is something cushy like ‘engine architect’ where you just go to Microsoft conferences and read technical papers all day, but those of us who have to ‘ship an actual product’ have other stuff to do too.

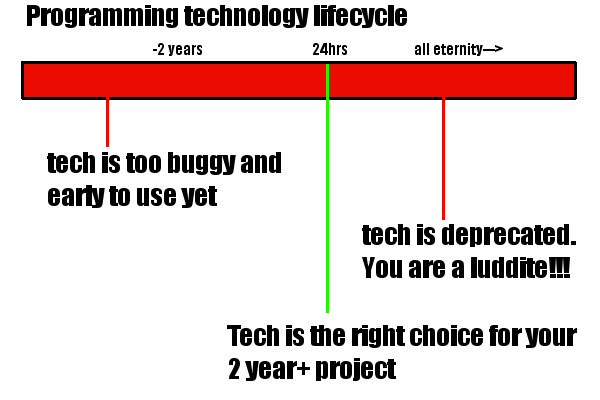

Is it just me? What technology have you finally sat down to use, or ordered a book for, only to discover that all the cool hip kids (the ones who never tend to ship anything) already think it’s out of date? I reckon the lifecycle of programming technology goes like this:

Bah…